Autopilot

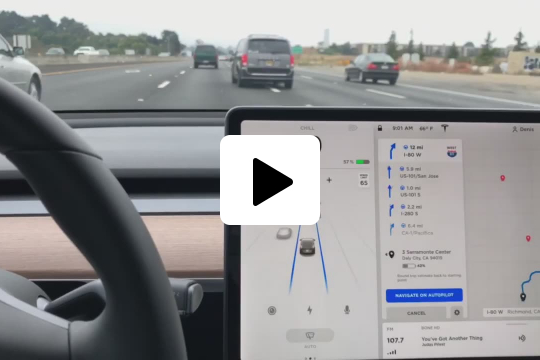

Tesla Autopilot enables your car to steer, accelerate and brake automatically within its lane. As a driver, you’re required to supervise Autopilot’s decisions. Its main features are Navigate on Autopilot, which suggests lane changes to optimize your route; Autosteer, which keeps being improved to handle more complex roads; and Smart Summon, which brings your car to you in a parking lot, maneuvering around objects as necessary.

Tesla cars come standard with advanced hardware to provide Autopilot features today and full self-driving capabilities in the future. You can learn more about Tesla Autopilot on Tesla's website: https://www.tesla.com/autopilot.

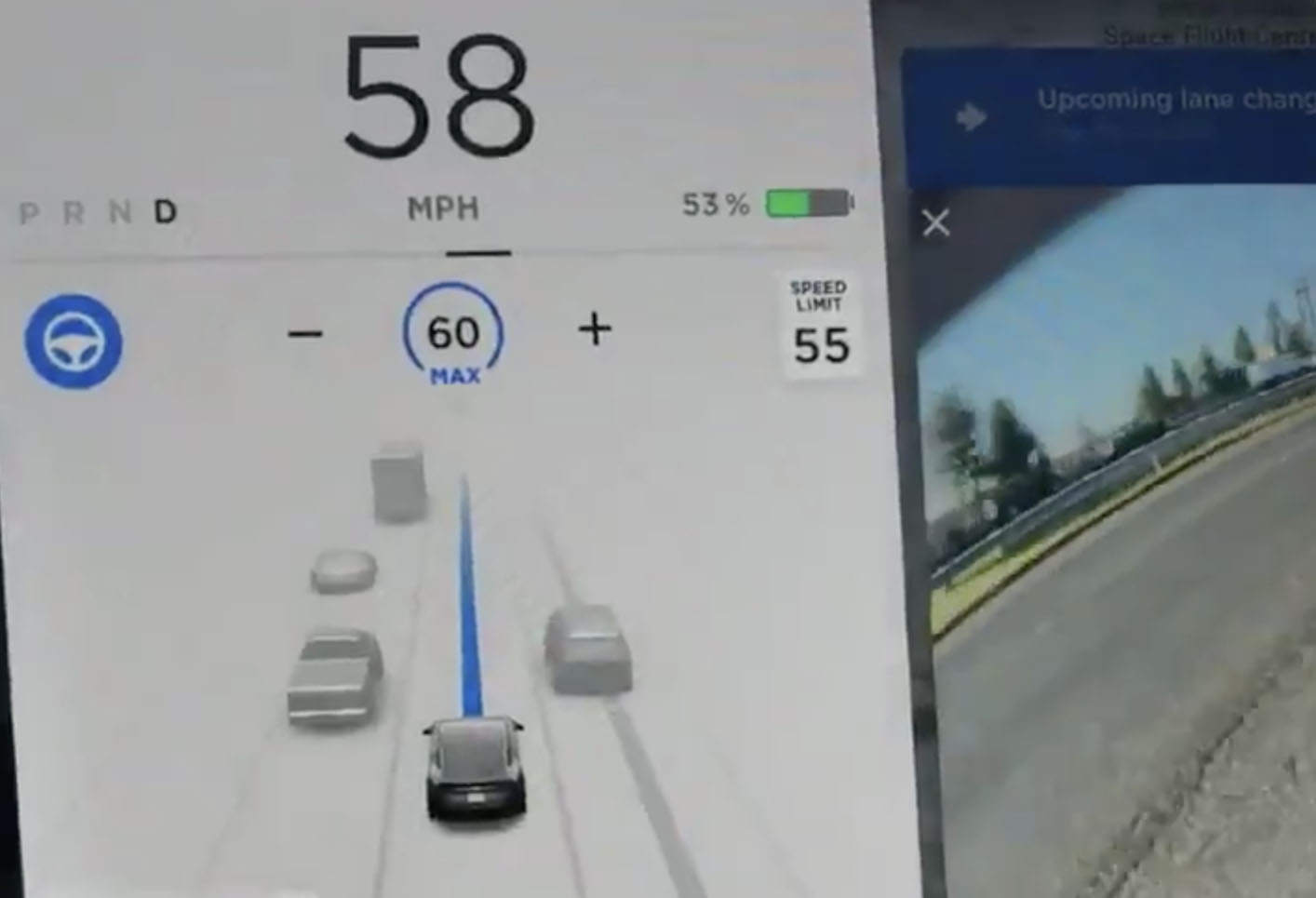

Pictures of Autopilot

Blog posts related to Autopilot

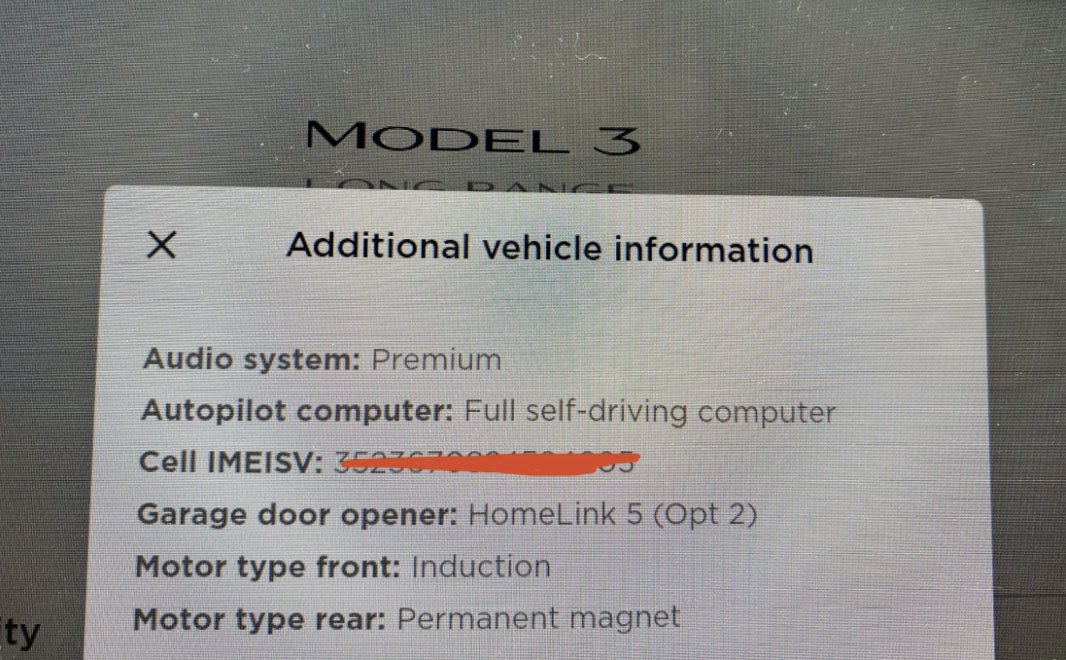

How to tell if a Tesla has Full Self-Driving (FSD)

To know for sure whether a Tesla has Full Self-Driving (FSD), either open the Tesla app and tap 'Upgrades', or check 'Additional Vehicle Information' under Controls > Software on the car's touchscreen.

Autopilot, Enhanced Autopilot, and Full Self-Driving features and cost

Are Autopilot and Enhanced Autopilot the same? What about Full Self-Driving? How much does it cost to upgrade to FSD? How do I know what I have in my Tesla? Learn more about Tesla's AP, EAP, and FSD and how to upgrade.

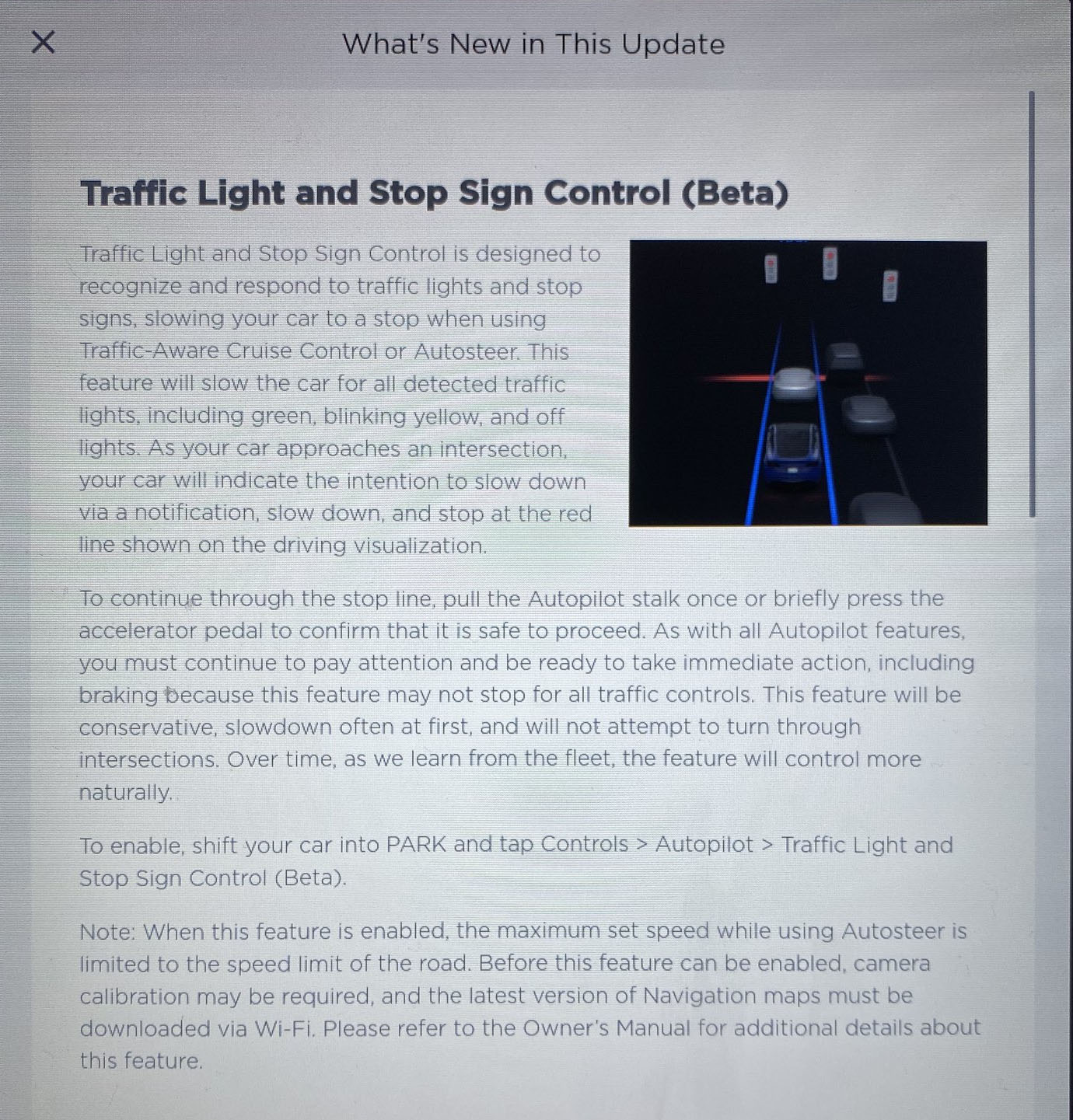

What's in Tesla's software update 2020.12.6

Tesla's latest software update, 2020.12.6, brings everyone a new and long-awaited feature: Traffic Light and Stop Sign Control. This new feature requires Autopilot HW3 (Full Self-Driving) and enables the car to stop at traffic lights and stop signs by itself. Read more and see release notes here.

[New feature] Tesla's Traffic Light and Stop Sign Detection

Tesla has started rolling out an Early Access only version, 2020.12.5.6, which introduces Traffic Light and Stop Sign Detection in beta. I've had access to a few videos of a Model 3 running 2020.12.5.6 from an anonymous source, and I want to share with you what I think is interesting.

Do I need MCU2 to upgrade to Hardware 3 (FSD)?

With the Infotainment upgrade available (MCU1 to MCU2 retrofit), Tesla owners with MCU1 who purchased FSD are wondering if MCU2 is going to be required and if they're going to have to pay for it.

Exclusive look at Enhanced Summon and Autopilot coming in v10

I've had access to a few videos of a Model 3 running 2019.28.3.11 - an Early Access only release. I want to share what I think is interesting in these videos with you. These new videos showcase a few features that I expect will be released to the general public under version 10 of the firmware.

Videos about Autopilot

Past Tesletter articles

'CityStreetsBehavior' found in the firmware

With 2019.40.1.1 Tesla pushed code to our cars that they are not currently using for the public. According to @greentheonly, there’s code tagged as “CityStreetsBehavior” which is brand new. In a follow-up tweet, he mentioned that most of its logic is missing and some comments indicate it’s targeting HW3, which isn’t a surprise since city streets will be only for folks who purchased FSD, all of which should get the AP processor swapped in the next few months.

Read more: Twitter

From issue #88

2019.20.4.4 and I can now take a 90 degree turn on AP

The Tesla AP team keeps improving the software with every release. Here is an impressive video of a Tesla taking a 90 degree turn that the car could never do before.

From issue #68

2020.12.6 is here and it brings us all Traffic lights and stop sign detection

Ok, not all of us, only those of you with FSD. But it’s here for everyone and it’s really exciting. While this isn’t fully autonomous, the idea of the car stopping by itself and restarting when the driver says so sounds pretty good to me! Remember to be in control of your car at all times.

From issue #109

2021.4.18 brings animal assets to the masses

The latest software update also brings cat and dog visualizations to our cars. Some owners also claim to have seen colored cars for a split second. Or maybe it was a dream about the future?

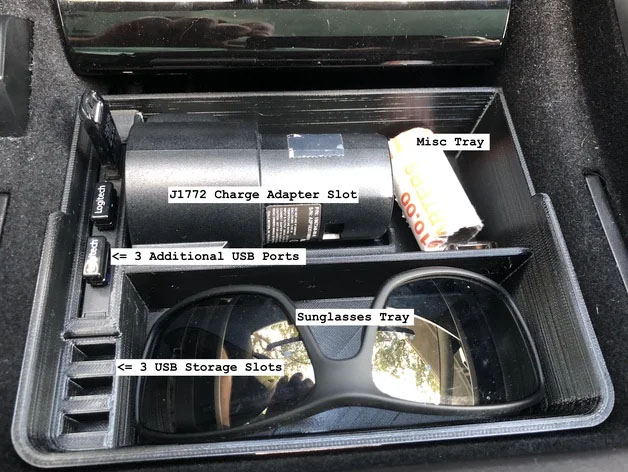

From issue #164

3D printed Tesla Model 3 storage tray with a built-in USB hub and J1772 adapter slot

I’m not sure how useful it is to have the J1772 adapter right there in the console - probably it depends on how much you use it - but I like seeing people designing these things


Read more: Reddit

From issue #80

[Video] AI for Full-Self Driving by Andrej Karpathy

Earlier this year, Andrej Karpathy talked about AI for Tesla’s FSD at the ScaledML Conference. It’s not the first talk by Karpathy that we’ve shared; you know we’re big fans. In this one, he discusses some of the work being done at Tesla around AI and vision to improve FSD. In particular, he talks about how they’d like to train neural networks to do the planning and the bird’s-eye-view prediction inside the network itself, rather than relying on explicit predictions as they do today.

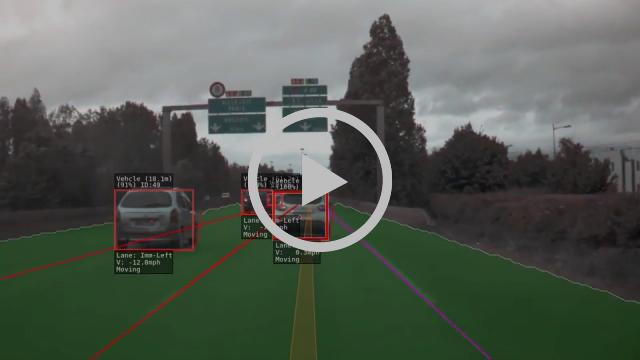

From issue #108

[Video] Traffic light and stop sign detection

A few days ago, Tesla started rolling out a new version 2020.12.5.6 (EAP only) introducing the expected new Traffic light and stop sign control feature. It’s looking pretty good although it’s still not a final product e.g. it will stop at all traffic lights, no matter if green or red, unless you override it. In our opinion, Tesla is being conservative with the rollout which is good because it’s a matter of safety. In case you missed this, check out these videos we shared to see it in action.

From issue #108

Airplane vs. Tesla Autopilot

Ryan is an airline pilot and flight instructor, as well as a Model S owner. In this episode of Supercharged Podcast he compares his experience with autopilot in airliners and Teslas.

Read more: Supercharged Podcast

From issue #10

Alphabet's Waymo and Tesla's Autopilot FSD make a trip to the store

Both cars make it to the store with zero disengagements. Tesla’s FSD Beta makes the exact same trip in almost 3 minutes less. It would be great to see the same drive during the daytime with some traffic.

From issue #154

Andrej Karpathy talks about AI at Tesla

Here are some highlights of Karpathy’s talk about AI at Tesla at the PyTorch Developer Conference. Having said that, I encourage you to watch the entire video; he does a great job explaining this complex subject.

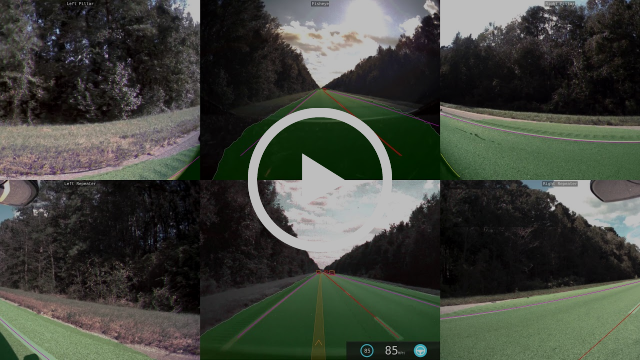

- [3:45] Camera feeds get stitched together to understand the layout around the car

- [4:00] The images get stitched up to create the Smart Summon visualization. It reminds me of old strategy games when, under the fog of war, you keep discovering the map as you move forward

- [5:00] Three cameras predicting intersections

- Autopilot has 48 neural networks that make 1,000 distinct predictions and take 70,000 GPU hours to train. On a single node with eight GPUs, training would take about a year

- None of the predictions made by Autopilot can regress. To make sure that doesn’t happen, they use loop validation and other types of validation and evaluation

- They target all neural networks to the new HW3 processor

- Project Dojo: Neural Network training computer in a chip. The goal is to improve the efficiency of training by an order of magnitude

- 1B miles on NoA and 200,000 automated lane changes, and 800,000 sessions of Smart Summon
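The training figure in the highlights is easy to sanity-check. Here is a minimal sketch, assuming the 70,000 GPU hours are spread evenly over a single eight-GPU node with no overhead; the function name is ours, purely for illustration:

```python
def wall_clock_days(gpu_hours: float, gpus_per_node: int, nodes: int = 1) -> float:
    """Days of wall-clock time needed, assuming perfect scaling across GPUs."""
    hours = gpu_hours / (gpus_per_node * nodes)
    return hours / 24


# Figures quoted in the talk: 70,000 GPU hours, one node with eight GPUs.
days = wall_clock_days(70_000, 8)
print(f"{days:.1f} days")  # roughly one year
```

Adding nodes divides the time accordingly, which is presumably why dedicated training hardware matters so much here.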

Announcements from the Tesla Q2 2018 results conference call

We don’t cover Tesla’s stock, financial results, or anything else that isn’t about their products, but during the Q2 call they made some really cool product announcements:

- v9 would include on-ramp off-ramp AP with automatic lane change when possible

- Hardware 3 will be introduced next year. According to the announcement, the new hardware is a chip designed in-house. This chip is designed explicitly for neural network processing. There are drop-in replacements for the Model S, Model X, and Model 3. According to Elon, the current Nvidia hardware can process 200fps while this new one can process over 2000fps.

- The coast-to-coast trip on AP won’t happen soon. The AP team is focused on “safety features” like recognizing stop signs and traffic lights in a super reliable way. They don’t want to distract the team or do it with a predefined hardcoded route, so it’s going to have to wait.

Antioch, CA through the eyes of FSD Beta

I love how the FSD visualization is projected on top of what he is recording. Really nice video.

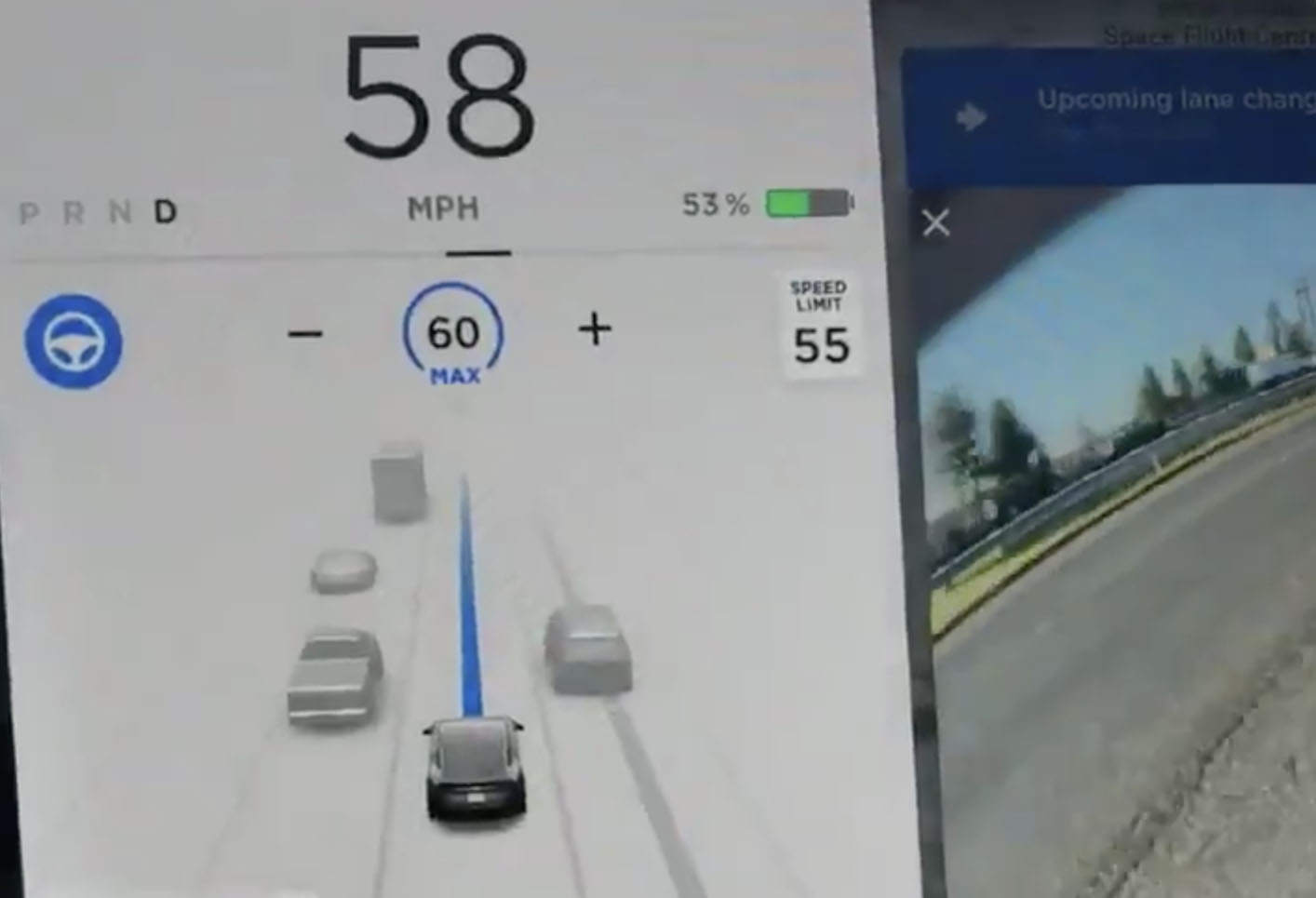

From issue #157

AP improvements on residential roads with 2020.28.5 update

Cool video by ‘Black Tesla’ showing Autopilot on residential roads with the latest update 2020.28.5. The update introduces the ability to increase the speed limit up to +5 mph when ‘Traffic and Stop sign detection’ is enabled to make the driving experience more natural. And looking at this video, it seems like it does the job!

From issue #122

AP to make lane changes automatically on city streets

It seems like AP can already make automatic lane changes on city streets in alpha. Production release coming up in ‘a few months’ (in Elon’s time 😉). So exciting to see this happening and our cars becoming smarter!

From issue #121

AP2 allowing changing lanes in roads that weren't available before

Multiple people have reported this in Norway, Finland, and the Netherlands.

Read more: [TMC Forum](https://teslamotorsclub.com/tmc/posts/2761508/)

From issue #10

AP1 and AP2 comparison

Chillaban compares AP1 and AP2 running version 2018.21.9. He summarizes his points saying “Overall, my experience so far is that without a doubt in my mind, AP2 is more capable than AP1 now in 2018.21.9 and beyond”.

Read more: TMC Forum

From issue #15

Autopilot '4D' rewrite

Tesla has been talking about an Autopilot rewrite for a while now, which would presumably use 4D vision (instead of 2D). Elon has recently shared a new timeline on Twitter:

- Private beta in 2-4 weeks

- Public beta (Early Access Program) in 4-6 weeks

- Tesla owners in the US in mid-December

In case you don’t know, Elon and Tesla use “4D vision” to mean the process of stitching all cameras together in motion, with the fourth dimension being time.

From issue #129

Autopilot 2020.36.11

Testing the latest Autopilot update 2020.36.11. Chime on green, stopping for red lights and challenges with lighting, beeps when driving by people, smoother freeway exiting, keeping in the center of the lane on city roads without lights, and on the freeway with blinding lights, and more! Overall, 💪 progress.

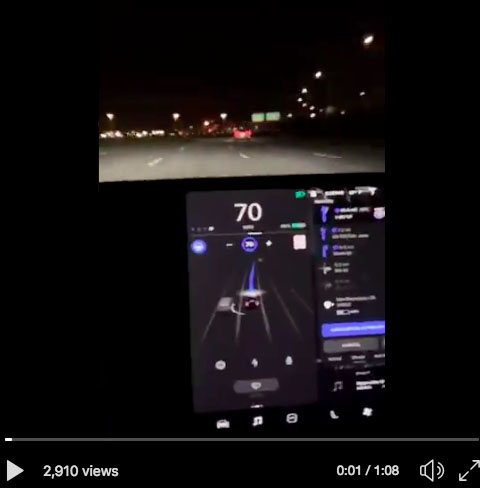

From issue #131

Autopilot assist activated

Last week, @greentheonly found a huge list of FSD settings that are not available for drivers to configure. This week, he shows us the ‘Augmented Reality’ view, an overlay layer on top of what Autopilot sees. Check out the cool pictures and videos.

From issue #142

Autopilot available to purchase for $2,000 until July 1st

Autopilot is available at $2,000 via Tesla in-app purchases until July 1st. In addition, if you have EAP, you can also purchase FSD for $3,000 until the same date. After July 1st, FSD price will go up to $4,000 for owners with EAP.

The question is, should you get AP or FSD? If you ask me, I think Autopilot is worth it if you have a long commute or travel often in your Tesla. It definitely makes driving safer and more relaxed (or less stressful, depending on your personality and how much you like to drive). Basic Autopilot does not include Navigate on Autopilot, lane changes, Summon, Autopark, or traffic and stop sign control. If those are the features you’re looking for, then go for FSD before its price increases.

Read more: Twitter

From issue #117

Autopilot avoids bumper in the middle of the road

Luck? Maybe. Maybe superpowers. Really cool to see Autopilot swerving out of the way and not hitting the bumper.

From issue #176

Autopilot HW4 and future retrofits for HW3 cars

During the investor call, Elon Musk mentioned that HW4 for FSD will launch with the Cybertruck. He also noted that offering upgrades from HW3 to HW4 will not be necessary as long as HW3 can surpass the safety of a human driver. In Elon’s words, “If HW3 can be, say, 200% or 300% safer than humans, HW4 might be 500% or 600% safer.”

From issue #252

Autopilot HW4 will be coming next year in the Cybertruck

As someone who has driven on the hills of Berkeley, it is amazing to see the car handling these situations!

From issue #178

Autopilot is doing tire tread depth estimation

Greentheonly reported that someone discovered that Autopilot is doing tire tread depth estimation, probably to understand whether the weather conditions, speed, etc. are safe given the current state of the tires.

From issue #156

Autopilot may soon render all Teslas with their own model and color

It seems that soon - ElonTime™ - Autopilot may render all Tesla models with their own model and color instead of showing an image of a generic sedan/SUV. TBH, I was expecting that to be the case when I first got my Model S almost three years ago. Cybertruck would get its own rendering as well.

From issue #123

Autopilot recognizing a dog as a pedestrian

In this video by greentheonly, Autopilot recognizes both the dog and the owner. Even though it incorrectly tags the dog as a pedestrian, note that it does get recognized so that’s what matters in order to be able to avoid it.

Looks pretty elaborate and not a single frame fluke? Or am I imagining things?

Somebody, walk your dog in front of your car and see if it shows up on IC to test (put car in D)?

All cams but pillars it seems. pic.twitter.com/8u846Vsq1A

— green (@greentheonly) November 11, 2019

Autopilot rewrite with 3D labeling is only starting to use FSD computer’s potential

In Part 2 of the recent Elon Musk interview by Thirdrow Tesla, we learned that the Autopilot team is doing a foundational rewrite, moving from separate networks for each camera to a single system that combines all of them, along with planning and perception. This Reddit post goes over why this is a big deal and why the change was needed.

Read more: Reddit

From issue #98

Autopilot saved this owner's Model 3

Video of a Model 3 on Autopilot (according to the driver) avoiding a pickup truck. You can see the near miss from two different angles. That was close!

From issue #125

Autopilot speed limit testing

Tesla Tom brings us this Autopilot version 2020.44 speed limit testing on single and multi-lane roads with both fixed and offset speed limits. It looks like on multi-lane roads AP still doesn’t apply the changes automatically, but if you push them manually- the car recognizes the speed limit change and you can tap on it on the screen- AP will match it appropriately. On a single lane road AP does automatically match the road speed when it drops (not when it increases, in which case you have to push it). All in all, still room for improvement, but feeling good about this!

From issue #137

Autopilot stopping is GPS based with vision assist 📷

Greentheonly got access to the manual from one of the betas, which contains a section called ‘Stopping at Traffic Lights and Stop Signs’. Currently, Teslas with HW3 and FSD-preview display stop signs, traffic lights, and other road markings. However, in this new beta, when you are using Autosteer or Traffic-Aware Cruise Control, the car actually stops for them too.

Taking another step towards complete FSD, Tesla has decided to release it in a way that still needs user input to go through the intersection. When approaching the signal, the car slows down, even if the traffic light is green; to continue through the intersection you must press down on the gear lever, the Autopilot stalk (S and X), or the accelerator. The notes from the manual also highlight that if you are in a turning lane, you need to perform that maneuver yourself.
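The behavior described in the manual excerpt boils down to a small decision rule. The sketch below only illustrates that description and is not Tesla's code; the function, enum, and parameter names are hypothetical:

```python
from enum import Enum


class Action(Enum):
    CONTINUE = "continue through the intersection"
    SLOW_AND_STOP = "slow down and stop"
    DRIVER_TURNS = "driver performs the turn"


def approach_intersection(driver_confirmed: bool, in_turning_lane: bool) -> Action:
    """Decide what happens at a traffic light or stop sign.

    Follows the beta manual as summarized above: the color of the light
    is not an input, only whether the driver confirmed (gear lever,
    Autopilot stalk, or accelerator).
    """
    if in_turning_lane:
        # Turns are left to the driver in this beta.
        return Action.DRIVER_TURNS
    if driver_confirmed:
        return Action.CONTINUE
    return Action.SLOW_AND_STOP


print(approach_intersection(driver_confirmed=False, in_turning_lane=False).value)
```

Note that a green light alone never yields `CONTINUE` in this sketch, which matches the conservative rollout described in the article.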

From issue #105

Autopilot team keynote

Ashok, Director of Autopilot at Tesla for over three years now, seems to have taken over from Andrej in presenting at conferences. In this keynote, Ashok talks about many things, including Occupancy Networks, Tesla’s solution for predicting free space on the road. Really interesting!

From issue #230

Autopilot team worked last weekend to do a limited release of 10.12

And hopefully, if everything goes well with the limited release, they will do a point release (10.12.1) and broaden the release.

From issue #216

Autopilot thinks the smoke-obscured sun is a big yellow light

Full self-driving has come a long way, but it’s still a few edge cases away from its final version. In this video, you can see how AP identifies the sun (obscured by the smoke) as a yellow traffic light. Now that Tesla has the info needed to train the system on this, I’m sure it will get fixed soon.

From issue #126

Autopilot usage survey

Are you curious to see how other Tesla drivers use the AP? BostonGraver has put together a survey to collect this data anonymously. Results are public:

More: Survey

From issue #9

Autopilot vs Fog - Sydney to Wollongong - Australia

Tesla AP handling the fog like a boss thanks to the combination of cameras and front radar.

From issue #64

Autopilot will route you automatically based on the time of the day and what's on your calendar

This isn’t new and, in fact, Elon mentioned it a couple of years ago (worth knowing that it is still in his head!). It would be even better if it’d do it based on past patterns, e.g. every morning I first drop off my kid and then I go to work, wouldn’t it be awesome if my Tesla just knew that and routed me automagically? 🎩

From issue #159

Autopilot/FSD information from Q4 2018 earnings call

u/tp1996 put together a list of what was discussed:

- NoAP - Option to disable stalk confirmation coming in a few weeks.

- Traffic Light and intersections - Currently working at about 98% success rate. Goal is 99.999%+.

- Stop sign detection - Elon said this was very easy and trivial as it can be geo-coded, and stop signs are very easy to recognize.

- Navigating complex parking lots - Includes parking garages with heavy pedestrian traffic.

- Advanced Summon - Will be the first example of the car being able to navigate complex parking lots. ETA “Probably next month”.

Read more: Reddit

From issue #45

Bikes, speeders, and construction with FSD Beta & a trip to Santa Cruz without interventions

We love FSD Beta videos. Here are two more from last week:

- Model 3, version 2020.44.10.2. Watch it handle bikes, speeders, and construction like a champ!

- Model 3, version 2020.40.8.13. A trip from Redwood City to Santa Cruz (California) on HWY17 without interventions!! 🤯

Bypassing the Los Angeles rush hour commute, at 116 MPH, underground

I can’t wait for a solution to end the soul crushing heavy traffic!

From issue #65

Cars with AP2.5 will be able to upgrade to AP3 and get the FSD Subscription

The FSD Subscription is supposed to land sometime in May and Elon just confirmed on Twitter that cars with AP2.5 will be able to subscribe and get the retrofit to AP3. What he didn’t mention is if there will be some type of minimum subscription required. We should know soon!

From issue #161

Cross-country drive with Cybertruck

Friends from the East Coast, after the exhibit in LA a couple of weeks ago, the Cybertruck is coming to you. Or so Elon said in a reply to @InSpaceXItrust on Twitter: “We will aim to do a cross-country drive with Cybertruck later this year.”

From issue #119

Cybertruck AP repeater camera

A lot of folks were saying that the Cybertruck didn’t have any Autopilot cameras but Robert from Talking Tesla snapped a picture of them.

Read more: Twitter

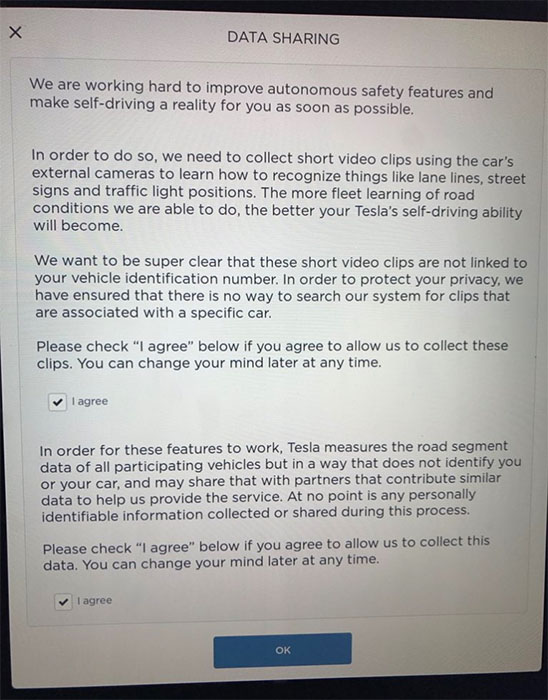

From issue #87

Data sharing and privacy - what's actually collected?

By verygreen: The autopilot trip log contains coordinates of your trip placed into buckets of whenever autosteer was available, used and so on. (…) What other things I have noticed: the anonymization is actually pretty superficial.

Read more: TMC Forum

From issue #16

Deep-dive into Autopilot Shadow Mode

Since there is a lot of misinformation about how Tesla’s “shadow mode” works, verygreen went on a tweetstorm to shed some light on what it does and doesn’t do. In a sense, it’s more advanced than some people think (lots of rules and triggers) but less than others assume (it doesn’t constantly monitor what the driver does and report discrepancies to Tesla). Pretty informative read, as always, from verygreen.

Read more: Twitter

From issue #47

Early FSD drives 2020.40.8.11

By the time you read this you’ll probably have watched a bunch of FSD videos but here are my favorite ones so far (apart from the ones I already shared last week):

- FSD in action, followed and recorded by a drone, pretty cool. Gives a different perspective and you can clearly see what works and what doesn’t (yet)

- FSD yielding to oncoming traffic, then crossing the centerline to go

- This thread contains videos of FSD handling turns on city streets, speed bumps, roundabouts, and more!

- FSD 360° view, you can (and you should) move the image around while watching

- Keep an eye on this one since he plans to retake the loop as FSD updates

Elon Musk talks Autopilot (amongst other things) with Lex Fridman

Another (3rd) interview of Elon by Lex Fridman. The interview covers a lot of ground, Tesla and non-Tesla related. Here are some interesting bits that are Tesla related:

- There is a major effort to reduce end-to-end latency and variability in latency from image sensor inputs to control outputs.

- In the future, the system will consume raw outputs from the image sensors.

- Some parts of the system still operate on single cameras; those are in the process of being replaced by versions that use all cameras.

- Tesla is working on improving ‘the memory’ of the system, aka what the car saw just a few seconds ago.

Enhanced Autopilot is back as an option (in Europe)

It seems that our friends in Europe can now buy EAP again. Since EAP offers all the same features that FSD offers at the moment in Europe, this is really good bang for your buck.

From issue #150

Enhanced Autopilot now available for purchase for $6k

The best bang-for-your-buck Autopilot package is now back for all cars in all (most?) countries. In the US, you can add it to your Tesla for $6k. This gives you all the features that the FSD package offers today except traffic and stop sign control, potential access to the FSD Beta, and, in the future, autosteering on city streets. If you purchase this package, you can add FSD for $6k until Tesla raises the price again, or subscribe for $99 / month. You can buy it directly on your Tesla app, under Upgrades, Software Updates.

From issue #222

Europe considers relaxing self-driving laws under pressure from Tesla

It’s known that Tesla’s Autopilot doesn’t work as well in Europe as it does in the USA. The reason is that regulators in Europe have some absurd rules. For instance, they have limited how far Autopilot can turn the steering wheel, resulting in the car asking the driver to take over immediately in the middle of turns, and they have limited the amount of time that the car can engage the blinker to change lanes.

Honestly, all these sound absurd and, in the case of the steering wheel limitation, even dangerous. That is why I hope, as the article says, regulators in Europe relax their laws soon. The article is behind a paywall; if you want to read it in full, go here.

From issue #82

Example of emergency lane departure avoidance working as intended

The owner ended up with his head in the clouds (his words, not mine) for a moment, and didn’t notice how the road was curving. The emergency lane departure feature saved him from an expensive repair.

From issue #62

Explanation of electric assisted steering by BozieBeMe2

BozieBeMe2 says: “The electric steering is nothing more than a servo system. Just like in a robot. (…) When you intervene the computers commands, by offering a resistance in that movements, the amp loads go up in the servo and tells the AP computer to cancel the AP sequencing. It’s the same thing when a robot in the factory strikes some thing, the amp loads go up and the robot faults out.”
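BozieBeMe2's description amounts to a threshold check on the servo's current draw. Here is a rough sketch of that idea; the threshold, the sample values, the consecutive-sample debounce, and the function name are all invented for illustration and are not taken from any Tesla system:

```python
def should_cancel_autosteer(current_samples_amps, limit_amps=8.0, min_consecutive=3):
    """Return True once the servo current stays above the limit.

    Resistance from the driver's hands makes the servo draw more current.
    Requiring several consecutive samples avoids reacting to a single spike.
    All numbers here are made-up placeholders.
    """
    streak = 0
    for amps in current_samples_amps:
        streak = streak + 1 if amps > limit_amps else 0
        if streak >= min_consecutive:
            return True
    return False


# Normal steering effort, then the driver resists the wheel.
print(should_cancel_autosteer([2.1, 2.4, 9.5, 9.8, 10.2]))  # True
# Isolated spikes only, so Autosteer would stay engaged.
print(should_cancel_autosteer([2.1, 9.5, 2.3, 9.7, 2.0]))   # False
```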

Read more: TMC Forum

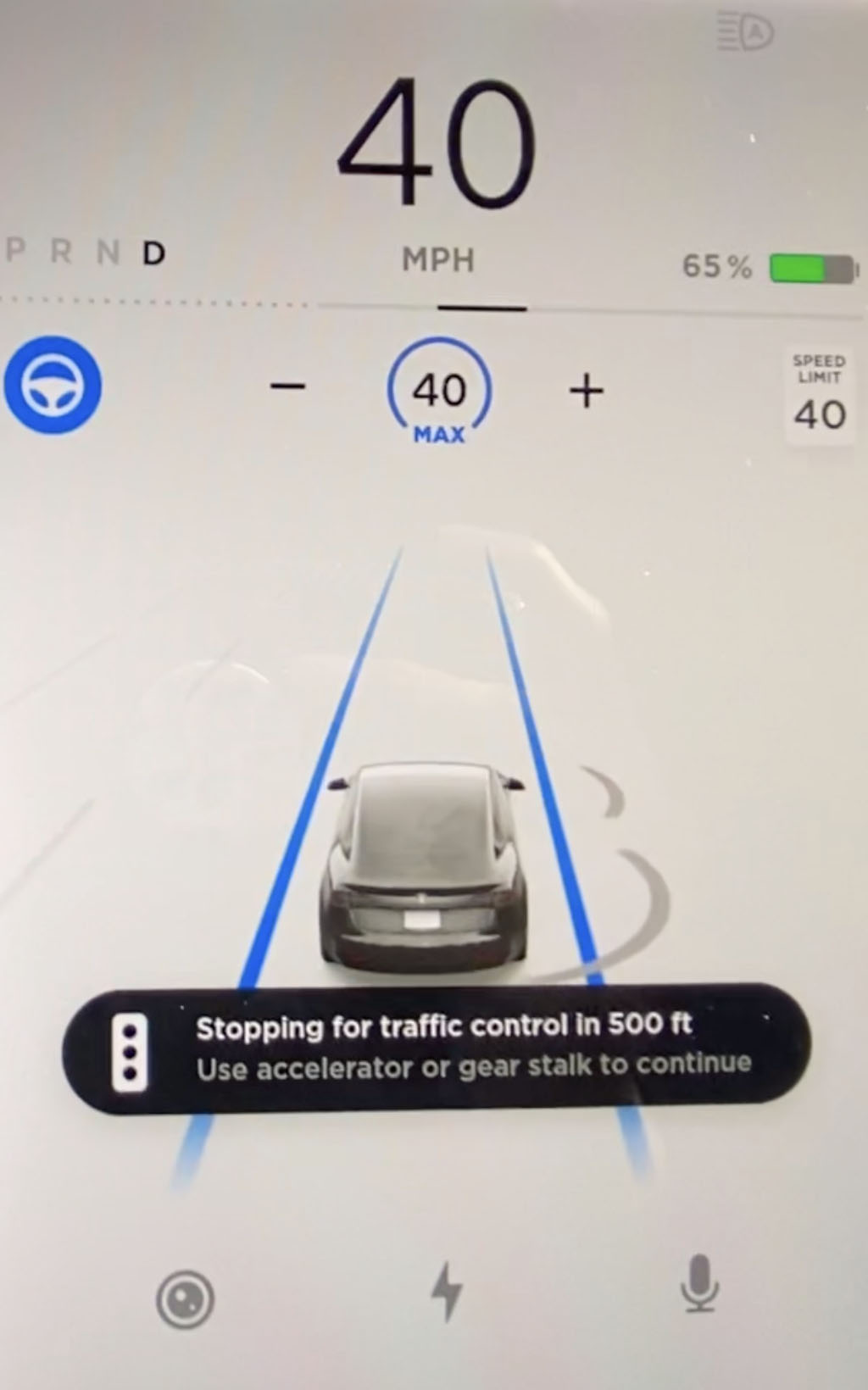

From issue #15

First look at Autopilot stopping at red lights 📹

This is the beta that we just mentioned in the previous article, in action. Check out this Model 3 stopping at traffic lights and take a look at the message when approaching an intersection. Only available to a really small group of folks in the Early Access program for now.

From issue #105

First look at Tesla’s latest Autopilot (2.5) computer in Model 3, S, and X vehicles

The user Balance reports seeing the following:

- Two Nvidia GP106-510 GPUs. The GP106 is used in the GTX 1060 (GP106-300 for 3GB and GP106-400 for 6GB). Looks like a very highly binned version

- One Nvidia Parker SoC (need better photo) with four 8 gigabit (1 gigabyte) SK Hynix DRAM chips for a total of 4 GB DRAM

- Fairly large Infineon and Marvell chips

- u-blox Neo-M8L GNSS module (GPS/QZSS, GLONASS, BeiDou, Galileo)

Read more: Reddit.com

From issue #1

First look at the FSD Beta

As promised, the FSD Beta arrived last night in the cars of some lucky Tesla owners. In this fun video, @tesla_raj and John from @teslaownerssv recorded their first impressions. Musk didn’t even wait 24 hours to share that the price of FSD is going up: next Monday, it will increase by $2,000. Finally, here’s a recap where you can see a few more videos. Enjoy!

From issue #134

FSD Beta 10.11.12 Autopilot max speed increased to 90mph

When Tesla shifted to a pure vision system, they reduced the AP max speed from the previous system’s 90 mph to 80 mph. Well, it seems that the newer version just increased it back to 90 mph.

From issue #210

FSD Beta 10.12 will increment the max speed for Autopilot

Ever since Tesla introduced the vision-only version of Autopilot, the max speed of the system has been reduced from 90mph to 80mph. Now, it seems that the next release (dropping this weekend 🤞) will raise the limit, hopefully back to 90mph.

From issue #216

FSD Beta 8.1 - 2020.48.35.7

I like the enlarged display and having the night mode, it is much easier to see the FSD visualization this way.

From issue #150

FSD BETA 9.2 takes on narrow streets in the Berkeley hills

As someone who has driven on the hills of Berkeley, it is amazing to see the car handling these situations!

From issue #178

FSD Beta drive in downtown Ann Arbor

Great video by DirtyTesla driving FSD Beta in downtown Ann Arbor. FSD Beta sees things before the driver does, handles lots of vehicles and pedestrians, and does well even on narrow streets (or narrow for US standards anyway). Still lots of room for improvement at roundabouts and turns, and as expected it requires quite a few interventions. I really like how it handles traffic lights and the little details like showing on the screen that it’s checking for traffic when turning right on a red light!

From issue #139

FSD Beta drive without disengagements

Version 2020.44.10.2. No disengagements in this video by @teslaownerssv but some speed adjustments. Interesting reactions of FSD in some situations, like at 4:23 (similar to what I would have done!).

From issue #139

FSD Beta going to vision only in V9

More than 100 stalls coming to Harris Ranch. It currently has 13 stalls and this change will make this Supercharger the world’s largest one. Is it me or every couple of months or so we get a new world largest Supercharger now?

We are already in April, and that means that FSD Beta V9 should be released soon. According to Elon, it’s ‘Almost ready’. One of the main changes in this version is the shift to vision only, dropping the radar. In another tweet, Elon mentioned that when vision is good enough the radar doesn’t add signal, and the complexity of integrating it is too great to be worth it.

FSD Beta on Lombard street (Part 2)

One month and five updates later, FSD Beta handles Lombard street- the windiest street in San Francisco- way better than it did the first time Tesla Raj drove his Model 3 down the San Francisco landmark. Watch FSD Beta go from top to bottom of Lombard St. without interventions and without speed or turn issues! Definitely a lot of progress in such a short period of time.

From issue #143

FSD Beta v9.2 turn testing and acceleration analysis

FSD Beta v9.2 was released last week. Great commentary in this video by Chuck Cook.

From issue #177

FSD follows navigation and completes a roundabout (UK) 📹

Check out minute 10:05 to see how Autopilot with firmware version 2020.16.3.1 handles a roundabout and follows the navigation directions to exit it all by itself. This AP version still doesn’t stop at stop signs and traffic lights. Just amazing. FSD is certainly not fully functional yet (same AP aborts entering a roundabout towards the end of the video), but seeing things like this makes me believe. PS. Lorry = truck 🚚

See more: YouTube

From issue #116

FSD upgrade in AP2 cars

A few months ago, there was some speculation about whether Tesla cars with AP2.0 would be able to upgrade to FSD. The answer is yes, and it can be done right now if you’re also getting the MCU2 retrofit. Reddit user ‘chillaban’ has shared his experience so you can read more about it here. If you’ve purchased FSD and are waiting for the Infotainment upgrade to be available, know that you can just schedule an appointment and request it. Most likely, you’ll get an appointment to get both done at the same time.

Read more: Reddit

From issue #113

Full-Self Driving beta on Lombard Street in San Francisco

What happens when FSD attempts the windiest street in the world? What happens when @tesla_raj and @minimalduck collaborate together? We get to watch great videos like this one!

From issue #136

Giveaway Alert: Win a set of Performance Caliper Covers (Model Y/3)

To all Model 3 and Model Y owners, here’s a chance to win this very cool new set of Performance Caliper Covers for your Tesla (3/Y). RT and follow @tesletter on Twitter to participate. Good luck!

From issue #168

Hidden Tesla detections

Greentheonly, of course, reporting some new hidden detections. In the video, we can see how AP detects and correctly tags the Tesla Model S and, for a fraction of a second, its color. In the past, Elon has said that they will be rendering other Teslas differently on the screen and maybe even doing a game, Pokemon style, to collect the different ones that you see.

From issue #165

How many miles have you driven in AP?

Another Twitter promise, this time about a section showing how many miles you have driven with AP. It would be even cooler if they exposed this via their API; I’m sure some folks would do cool things with that info!

From issue #111

How Tesla Autopilot detects cars moving into your lane

Good video explaining how Autopilot works (and used to work) at detecting cars cutting into your lane. Worth watching!

From issue #77

How to use Tesla Autopilot

In the last earnings call, Elon mentioned that the most common reason to visit a service center is to ask how Autopilot works. Well, Tesla Raj has published a great video describing how to use Autopilot for Tesla beginners.

From issue #71

Human side of Tesla Autopilot

Professor Lex Fridman -we published one of his surveys a while ago- just released his paper on driver functional vigilance during Tesla Autopilot.

From issue #54

HW2 anonymous snapshot (oldie but goodie)

Lukcyj was able to capture one of the anonymous snapshot/image/video uploads that got pushed out over his home network. So far, only partial success in getting anything useful out of it. One example is below.

Read more: TMC Forum

From issue #10

Impressive demo of autolabelers in some raw video

Andrej Karpathy posted some videos of a new project they are working on. Pretty accurate detection! Oh, if you are into AI, Tesla is hiring https://tesla.com/AI.

From issue #192

Increasing the max speed limit for AP Vision only

According to Elon, the next production version is going out next week and is changing the 75 mph limit while on AP to 80 mph. Personally, I rarely go above 80 mph when using AP, so I assume this will be enough for most.

From issue #170

Interesting thread by 'greentheonly' testing how AP avoids obstacles and pedestrians

The car did well in some of the experiments, not so much in others. Interesting thread!

We'll start with the ideal case. Car on AP, driving steadily when we encounter pedestrian firmly in our way.

— green (@greentheonly) December 6, 2019

We can see him from far away so we gracefully slow down, just like a real human! Perfect score! This happened 3 times out of 4. pic.twitter.com/w3ttt3ieAx

Intersection handling is coming but it will require HW3

According to Greentheonly, the Autopilot code today shows hints of the upcoming “intersections handling” functionality, but it will require Hardware 3. To be honest, it only makes sense that this kind of functionality will be part of FSD which requires HW3.

Read more: Twitter

From issue #102

Is Tesla already using vision to replace ultrasonic sensors?

According to this vehicle owner, their 2018 Tesla Model 3 with all ultrasonic sensors disconnected is capable of displaying distances on the screen. It appears that Tesla is running this capability in “shadow mode,” likely using real-world data to train the neural network. During the owner’s tests, they placed tape on the cameras and observed that the car stopped displaying distances for nearby objects.

From issue #252

Keeping humans safe on the dark streets

FSD Beta keeps getting better and better and sometimes can clearly see things that we, humans, have a hard time with. This is a great example of that!

From issue #215

More about the Autopilot rewrite

This week Elon shared more about the FSD computer and the Autopilot rewrite they’ve been talking about for a while now: “Tesla FSD computer’s dual SoCs function like twin engines on planes — they each run different neural nets, so we do get full use of 144 TOPS, but there are enough nets running on each to allow the car to drive to safety if one SoC (or engine in this analogy) fails.”

From issue #133

More FSD Beta videos [2020.40.8.12]

A new recap of short FSD Beta videos running the latest update 2020.40.8.12:

- Roundabout testing. Still not perfect but doing a pretty good job, all things considered!

- Avoiding road debris. I feel like FSD is definitely getting better at avoiding objects in the middle of the road. What do you think?

- Charging port open & close FSD beta new UI. The new UI changes coming with the FSD beta make the user experience more intuitive, for example, when opening and closing the charging port.

Neural Networks & Autopilot v9 With Jimmy_d

Jimmy_d does an awesome job at explaining Neural Networks and Tesla’s work on Autopilot to us mere mortals. Definitely worth listening to!

Listen to the interview: TechCast Daily

From issue #32

New 30 day Enhanced Autopilot trial!

Tesla just raised the price of AP if purchased after delivery to $8k but the new trial offers a promotional price of $5,500. If you try it and like it, buy it while it’s still discounted!

Disclaimer: We love our AP and think it makes road trips much more relaxed even though you still have to pay full attention to the car, traffic, etc.

Read more: Tesla

From issue #32

New Autopilot visualization animation in 2019.28.3.7

People in the Early Access Program now have a new AP visualization when changing lanes.

The same version allows the driver to zoom in and rotate.

Cool stuff!

From issue #74

New Tesla patent for 'Generating ground truth for machine learning from time series elements'

Tesla strives to achieve Level 5 of autonomy, therefore it is very important to correctly develop all the processes and methods that, as a result, will help the company achieve its goal. This new patent discloses a machine learning training technique for generating highly accurate machine learning results and it gets Tesla one step closer to their goal.

From issue #135

New version of Vision Only AP re-enables a few features

If you remember the Pure Vision announcement when Tesla removed the radar from Model 3s and Ys in the US, it came without a few features that we have had in our Teslas for some time. Now, 2021.4.18.10 is restoring Emergency Lane Departure Avoidance, Smart Summon, and raising the max speed limit while in AP to 80mph.

From issue #170

News about platform regulation in Europe

Seems that the European Commissioner and Elon had a chat, and hopefully this means that Tesla has a clear way of taking their Autopilot and FSD to Europe in the same way that it works in the US.

From issue #215

Possible next speed limit 'match other traffic speed'

If you live in California, I’m sure you’ve been in the situation where, even going at or a bit above the speed limit, you’re still the slowest car on the road (go absurd speed limits!). Other times, depending on things like weather, traffic in other lanes, etc. it is just not safe to go at the speed limit of the road. In a response to a great video from our friend John (@teslaownerssv), Elon Musk has hinted at a future ‘match other traffic speed’ setting which would address those issues.

From issue #144

PSA: Don't wiggle the steering wheel to stop the nag, apply force in one direction

Really good tip by wickedsight on Reddit, this is at least how I do it.

From issue #79

Rumors of speed limit changes in an upcoming software update

As you probably remember, AP on backroads and non-divided roads used to allow the driver to set the speed up to 5 mph over the speed limit. When Tesla introduced Stop Sign and Traffic Light Control, they changed it so you couldn’t go past the speed limit. Well, according to our friends at Teslascope, the +5 mph allowance will be back in our cars very soon.

From issue #119

San Francisco to Los Angeles with no interventions

Omar from Whole Mars Catalog just did a trip from San Francisco to Los Angeles with no interventions other than charging the car at a couple of superchargers. Really cool!

From issue #145

See how Autopilot detects traffic lights

In this “what does Autopilot see” tweet from Greentheonly, we can see how the neural net tries to detect traffic lights and how it makes predictions about going/stopping.

Hm, I think this is shaping in the right direction now.

— green (@greentheonly) February 11, 2020

Also seems the system is somewhat confused by the double-stacked traffic lights, thinks they are further away and the bounding box is all wrong too. pic.twitter.com/fXfBf9VInT

See how Autopilot will display Tesla vehicles

We talked about this last week, and this week we’re sharing a new rendering brought to you on Twitter by @greentheonly. The new visualization shows the Model S, 3, X, and Y as Autopilot would display them. @greentheonly also said that ambulances, fire trucks, police cars, and ‘construction’ vehicles will be coming, but they are not there yet.

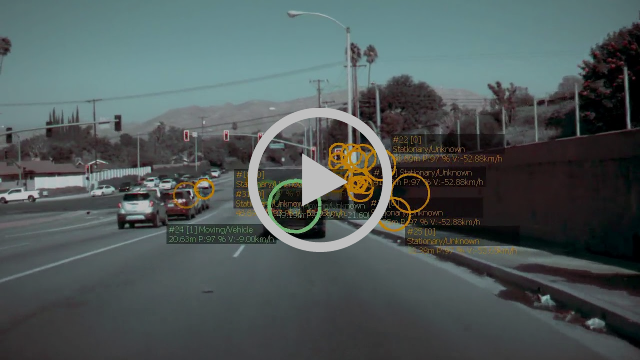

From issue #124

Seeing the world in Autopilot

Here’s a couple of pretty cool videos showing a visualization of the Tesla interpreted radar data. The size of the circle indicates how close the object is while the color represents the type (green - moving, orange - stationary, yellow - stopped). It is neat to be able to see what the radar sees, great work verygreen and DamianXVI!
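To make the encoding concrete, here is a minimal sketch of how one radar return could be turned into a circle. The colors come from the description above; everything else (the `RadarObject` fields, the 160 m range, the radius limits, and the choice that closer objects get larger circles) is an assumption for illustration, not how verygreen and DamianXVI actually built their visualization.

```python
from dataclasses import dataclass

# Color per object type, as described in the text above.
STATE_COLORS = {"moving": "green", "stationary": "orange", "stopped": "yellow"}


@dataclass
class RadarObject:
    distance_m: float  # hypothetical field: range to the object in meters
    state: str         # "moving", "stationary", or "stopped"


def circle_style(obj, max_range_m=160.0, min_radius=2.0, max_radius=20.0):
    """Return (radius, color) for one radar return.

    Assumption: closer objects get larger circles, scaled linearly
    between min_radius (at max_range_m) and max_radius (at 0 m).
    """
    clamped = min(max(obj.distance_m, 0.0), max_range_m)
    closeness = 1.0 - clamped / max_range_m
    radius = min_radius + closeness * (max_radius - min_radius)
    return radius, STATE_COLORS[obj.state]
```

For example, `circle_style(RadarObject(80.0, "stationary"))` gives a mid-sized orange circle under these assumed limits.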

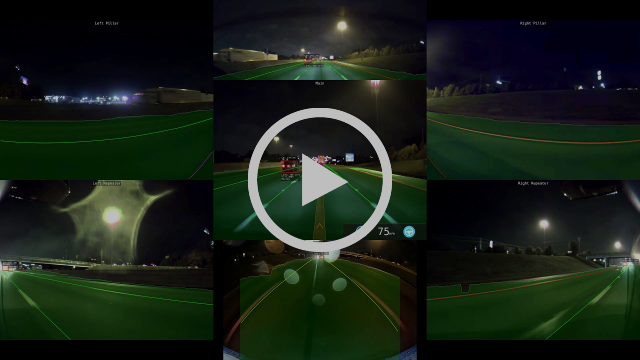

From issue #13

Seeing the world in Autopilot V9 (part three)

If you’ve been following Tesletter or almost any Tesla forum or blog for some time, you probably already know who verygreen is (or greentheonly, depending on the forum) and how much awesome information he is revealing from his car. This time he is back with a follow-up on what AP v9 sees. He has captured six of the cameras and the information that they detect. As always, this is awesome, great job verygreen!

In a second video we can see how AP can’t see a tire in the middle of the road, so please always be careful while using Autopilot!

From issue #30

Seeing the world in AutoPilot, part deux

A few issues ago we included videos of how AP2 sees the world. Now, thanks to verygreen and DamianXVI there is a more up-to-date and better version. It’s pretty awesome to be able to see what the car sees, this is a rare opportunity since it isn’t a marketing video. We recommend you to watch the videos and read - at least - the original post since it has a lot of interesting info. A few highlights:

- AP identifies motorcycles, trucks, and pedestrians. Tesla doesn’t show them just yet but it is showing motorcycles in v9

- The car identifies lanes and turns with no actual road markings

- AP predicts how the road behind a hill crest is going to be

- While it doesn’t render 3D boxes, it detects vehicles, speed, and direction

- The car is continuously calculating all these, no matter if AP is on or off. Some of this state could be matched from the “triggers” to cause snapshots to be generated and sent back to Tesla. But verygreen doesn’t seem to think it actually compares this model to the actual driving input at this time.

Read more: TMC Forum

From issue #26

Software & Autopilot History put into perspective

Great visualization created by CleanTechnica.

From issue #168

Some SW internals of Tesla Autopilot node (HW2+)

Following up on his Tweetstorm, verygreen explained the software internals for AP2+. Since it is pretty hard to summarize, I recommend reading the whole thing on Reddit.

Read more: Reddit

From issue #47

Speed limit recognition worldwide and roundabout support

Greentheonly was able to find new assets that hint at speed limit recognition coming to our Teslas. According to Green, we should expect this worldwide soon.

Check the Twitter thread to see the renders. Interestingly, some Tesla owners in the thread are claiming that roundabouts are being recognized as traffic signs and, in fact, you can check out the video below to see how FSD with 2020.16.3.1 handles entering and exiting a roundabout all by itself!

Read more: Twitter

From issue #116

Stats of a 100 day/15k mile cross-country trip in a Model X

Every time I talk to someone who isn’t familiar with EVs or Tesla, the question is ‘but … am I going to be able to go on road trips?’ Range and long-distance trips are a big question mark for a lot of future EV owners, but you can totally do it in a Tesla, and it’s not even a rare thing. u/octane097 shared their trip: 100 days, 15k miles, 70 Supercharger stops, and 95% of driving using AP led to… zero problems! The post has a lot of stats and pictures of the route and the trip, check it out!

From issue #126

Stop sign and traffic light stopping mode mass rollout is coming!

Elon Musk recently shared on Twitter that stop sign and traffic light stopping mode are coming soon to the US and a worldwide release will probably happen in Q3. E-X-C-I-T-E-D!

From issue #106

Successfully negotiated 2 roundabouts in quick succession on Autopilot 28.3.1

Ok, it is a small one, no traffic and it just continues straight after the roundabout… but this seems like progress!

From issue #74

Support for 'narrow spaces' on Autopilot is coming

According to Greentheonly, who has looked into the latest update 2020.36.10 a bit more in-depth than what the release notes say, support for ‘narrow spaces’ in the form of mirrors auto-folding in such places is coming. Not sure about this feature while driving but useful while parking for sure!

From issue #128

Take me to San Francisco!

Just say “take me to San Francisco” and your Tesla will automatically get on the Bay Bridge, pay the toll, and drive all the way to San Francisco with 0 disengagements.

From issue #53

Tesla AP urban environment 360-degree visualization

A visualization of the data eight autopilot cameras see when driving in an urban environment with cross traffic. Data for visualization was taken directly from an AP2.5 car.

From issue #39

Tesla approved to sell Model S and Model X in Europe with Hardware 4 (HW4/AP4)

Tesla has been approved to sell the Model S and Model X in Europe with Hardware 4 (HW4) and Autopilot 4 (AP4). Tesla is expected to introduce the HW4 with the Cybertruck this summer, it’s unclear when that will make it to the Model S and X and to Europe, but at least they now are allowed to do it.

From issue #254

Tesla Autopark now uses the cameras

Another discovery by Greentheonly. Only enabled by default for the refreshed Model S, although Greentheonly managed to enable it in his car. I hope Tesla releases it to the rest of the cars soon!

From issue #176

Tesla Autopilot and Full Self-Driving Capabilities (Simplified View)

What are the differences between Autopilot, Enhanced Autopilot, FSD, and FSD Beta? Well, it isn’t that easy to explain, but this table offers a simplified easy view of it all.

From issue #222

Tesla Autopilot avoids construction barrel in my lane

DirtyTesla (hi Chris!) posted this awesome video of his car (Model 3 with HW2.5 running 2019.40.1.1) avoiding a construction barrel that is literally in his lane. The screen doesn’t reflect the cone but as greentheonly tweeted this week, “cones are obviously detected on HW2+ just as a bunch of other stuff like traffic signs, traffic signals and so on, on HW2 none of this information is currently being sent to infotainment for visualization (there it used to do it in v9), on HW3 they send the cones info”

Tesla autopilot detecting stop lines

greentheonly is back to give us a glimpse of what AP sees. As of 2019.4, we have stop line detection enabled in public firmware.

From issue #49

Tesla Autopilot HW3

verygreen has found new information about Tesla’s AP HW3 in the firmware. There is too much information for us to condense here, so if you like this type of thing, go ahead and read the entire post on TMC.

Read more: TMC Forum

From issue #41

Tesla Autopilot miles

Check out this cool animated gif with Tesla autopilot miles since 2015.

From issue #15

Tesla autopilot stopping at red lights all by itself

Greentheonly (who else?) just discovered that 2019.8.3 comes with the hidden ability to detect and react to red lights and stop signs, and activated it! If you watch the video, it’s clear that this feature isn’t ready for prime time, but it is exciting to see progress on this front! Look at 0:40 and 1:30 for two successful detections and 4:05 and 5:12 for failures.

From issue #52

Tesla Autopilot Survey (from MIT)

Lex Fridman is leading an effort at MIT aimed at analyzing human behavior with respect to Autopilot by using computer vision, and he needs Tesla owners to help by answering this Tesla Autopilot Survey.

Read more: TMC Forums

From issue #3

Tesla Autopilot Survey (from MIT)

Lex Fridman is leading an effort at MIT aimed at analyzing human behavior with respect to Autopilot by using computer vision, and he needs Tesla owners to help by answering this Tesla Autopilot Survey.

Read more: TMC Forums

From issue #9

Tesla Autopilot’s stop sign, traffic light recognition and response is operating in ‘Shadow Mode’

We’ve known for a while, thanks to greentheonly, that the current firmware includes stop sign and traffic light detection and reaction, but it ships disabled. Now, in the information released by Tesla about their Q2, we can read the following:

“We are making progress towards stopping at stop signs and traffic lights. This feature is currently operating in ‘shadow mode’ in the fleet, which compares our software algorithm to real-world driver behavior across tens of millions of instances around the world.”

Read more: Teslarati

From issue #70

Tesla cameras may be reading speed limits soon

For a long time, the theory behind Tesla’s AP2+ not recognizing speed limit signs was the existence of a patent by Mobileye. It seems like Tesla has been able to get around it, since Elon Musk has recently said that it’s coming soon in a reply to Everyday Astronaut (@Erdayastronaut) on Twitter. Hopefully, Tesla will roll out a hybrid solution, we will see!

From issue #111

Tesla could have to offer computer retrofits to all AP 2.0 and 2.5 cars

Several comments made by CEO Elon Musk since the launch of its AP 2.0 hardware suite in all Tesla vehicles made since October 2016 indicate that the company might have to update its onboard computer in order to achieve the fully self-driving capability that it has been promising to customers.

Now it looks like Tesla might have to also offer computer retrofits for AP 2.5 cars.

Read more: Electrek.com

From issue #7

Tesla drives autonomously on a roundabout

It’s so cool to keep seeing Smart Summon getting better and better.

Watch how this #Tesla Model 3 safely takes a roundabout autonomously (via smart summon, not FSD...yet) : pic.twitter.com/DZCcrBqR4t

— TESLA_saves_lives (@SavedTesla) January 29, 2020

Tesla Enhanced AP saves owner

Marc Benton - well known Tesla community member - just recorded this while traveling from LA to Nashville.

From issue #52

Tesla FSD stress-tested in Berkeley, California

Berkeley is a city with a lot of weird intersections (I have been able to experience them myself) so it’s a good place to challenge Tesla’s FSD (Beta 10) 😁 As a friend of mine told me, looking at the video, and being familiar with the locations shown, it does feel like FSD can be trusted. Nice progress!

From issue #148

Tesla improves Autopilot visualizations by incorporating some FSD Beta visuals

In the new update 2023.32.4, Tesla has borrowed some visualizations that were previously exclusive to FSD Beta and added them to Autopilot, making them more accurate and containing much more information than the previous Autopilot ones. In my opinion, eventually, all the UI will be the same (as long as hardware permits), regardless of whether FSD is activated or not; the difference will be that with FSD, the car will handle the driving.

From issue #285

Tesla is nerfing Autopilot for Model S/X in Europe to comply with regulations

It is well known that Autopilot behavior is not the same in all countries since Tesla needs to adapt it to the regulations in the different markets. After the last software update 2019.40.2.2, Tesla has “reduced” the following Autopilot capabilities:

- Auto Lane Change in divided roads only, with a min. wait of 1.5 seconds

- Limited Autosteer

- Summon within 6 meters of your car only

- Reminder to touch steering wheel after 15 seconds

Read more: Electrek

From issue #90

Tesla is now giving trials of the FSD

Tesla used to give trials of the EAP but it was unclear what was going to happen after they re-defined the packages to AP and FSD. Well, it seems like they just started giving trials of the FSD features (Auto Lane Change, NoA, and Summon) right now.

Read more: Twitter

From issue #57

Tesla is rendering speedbumps now

I haven’t seen this in action, but it’s great to see the software rendering speed bumps and reducing the speed to 15 mph. According to Greentheonly, they are also working on some sort of 3D terrain visualization, maybe to render potholes and the like?

From issue #202

Tesla Model S/X Builds spotted with new Autopilot cameras

Our friends from the Kilowatts found a new Model S and Model X with what seem to be new cameras, and here are some interesting highlights:

- The repeater cameras have a much wider angle.

- The rear camera has a bigger housing.

- The front and pillar cameras seem to have an upgraded lens.

All the cameras have a red hue on the lenses that could be indicative of an anti-glare coating.

From issue #256

Tesla owners raging about AP on version 2018.48

Lots of Tesla owners reporting AP on 2018.48 is great.

“This is literally the first time I felt like my car can actually drive itself.”

“I feel like it now handles curves perfectly.”

“This update, even with AP2.5 hardware, made me really hopeful and optimistic about FSD. I didn’t really enjoy my NoAP experience too much before.”

We haven’t really had a chance to fully try it yet, but we’re very excited after reading all these comments :D

Read more: Reddit

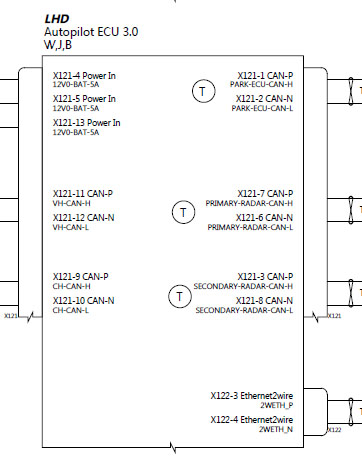

From issue #39

Tesla reveals new self-driving Autopilot hardware 3.0 computer diagram ahead of launch

A source told Electrek that Tesla updated the Model 3 wiring diagrams in its internal service documentation on January 9th to include the new Autopilot 3.0 computer as the new standard ECU in Model 3.

An interesting new piece of information is that the diagram shows the chip has connectors for a second radar, although current models only have a forward-facing radar mounted.

Read more: Electrek

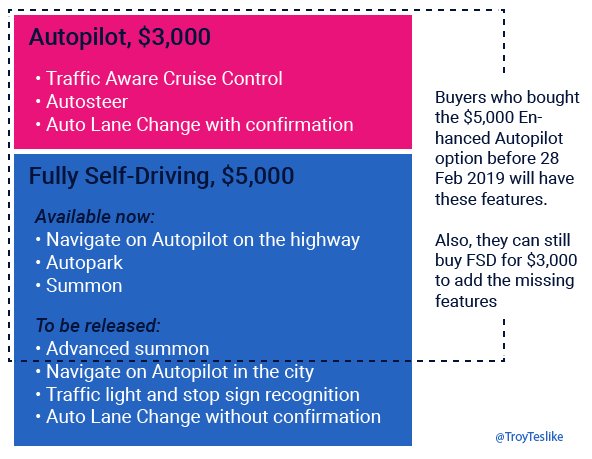

From issue #43

Tesla shuffles the EAP and FSD definitions around

Tesla just changed the definitions going forward but announced that customers who already purchased EAP will still get those features, even though they are now only included in the FSD package. Thanks Troy Teslike for the visualization.

See more: Tesla

From issue #49

Tesla spotted testing prototype with a new array of sensors 📷

Tesla has been spotted testing what could be a new array of sensors for Autopilot on a Model S test mule vehicle. While the FSD capabilities are constantly being updated via software updates, we haven’t seen Tesla update the sensor suite over the last four years.

Some say it would actually make sense that Tesla would be working on HW4 to address the blind spots and the 360 view, others (the source of the article), say it may be a Cybertruck prototype. If you ask me, while the rear cameras seem to match the ones of the Cybertruck, those repeaters are really high and I think Tesla may actually be testing the Semi’s Autopilot system.

From issue #118

Tesla turned on redundancy in HW3

According to Greentheonly, in the version 2019.40.50.1, the B node in the HW3 board starts the full copy of the Autopilot software. This is important because if one node crashes, the car can immediately start using the other one for redundancy.

Read more: Twitter

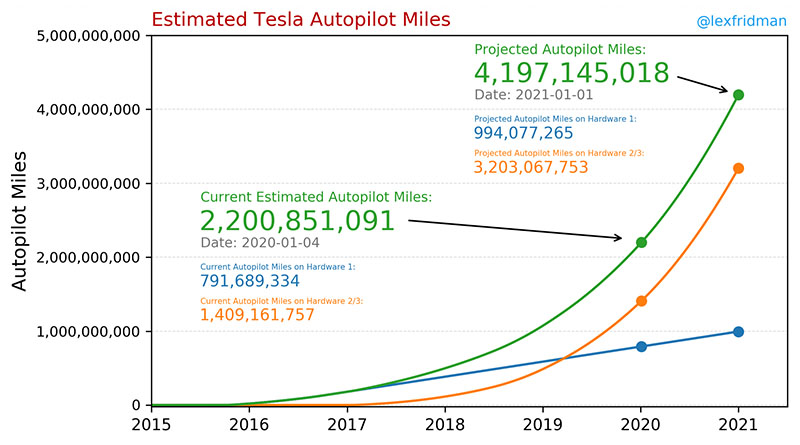

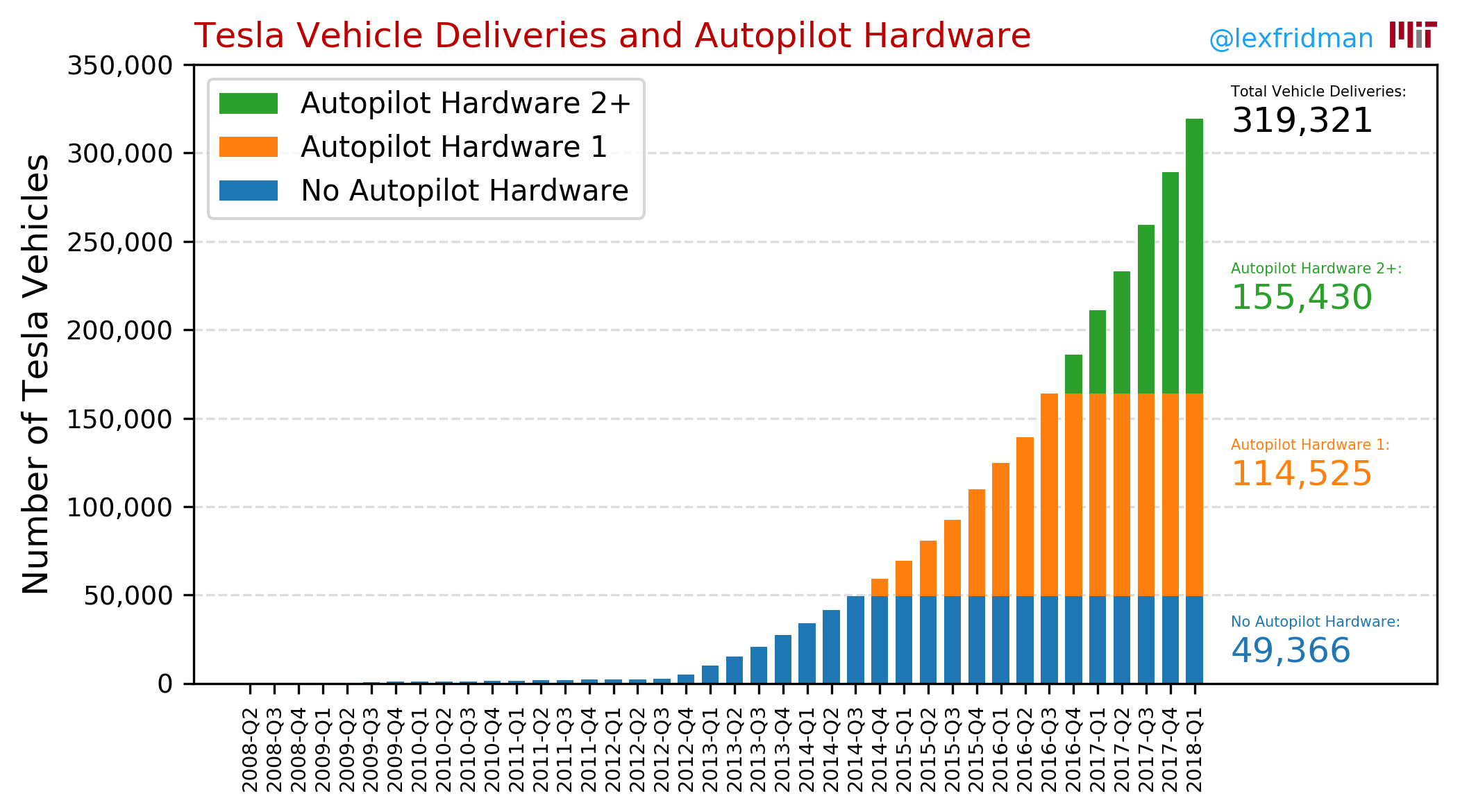

From issue #92

Tesla vehicle deliveries and Autopilot mileage statistics

Interesting stats by Lex Fridman:

- 737,570 Tesla vehicles delivered with Autopilot hardware 2/3.

- Estimated Autopilot miles to-date: 2.2 billion miles

- Estimated miles in all Tesla vehicles: 19.1 billion miles

- He projects that in the year they will nearly double the number of Autopilot miles
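To put the two mileage estimates in proportion, here is a quick back-of-the-envelope calculation. The function name is made up for illustration; the inputs are the two estimates listed above.

```python
def autopilot_share(autopilot_miles, total_miles):
    """Fraction of all estimated Tesla miles that were driven on Autopilot."""
    return autopilot_miles / total_miles


# Estimates quoted above: 2.2 billion Autopilot miles out of 19.1 billion total.
share = autopilot_share(2.2e9, 19.1e9)
print(f"Autopilot share of all miles: {share:.1%}")
```

With these numbers, roughly one in nine estimated Tesla miles was driven on Autopilot.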

Read more: Blog

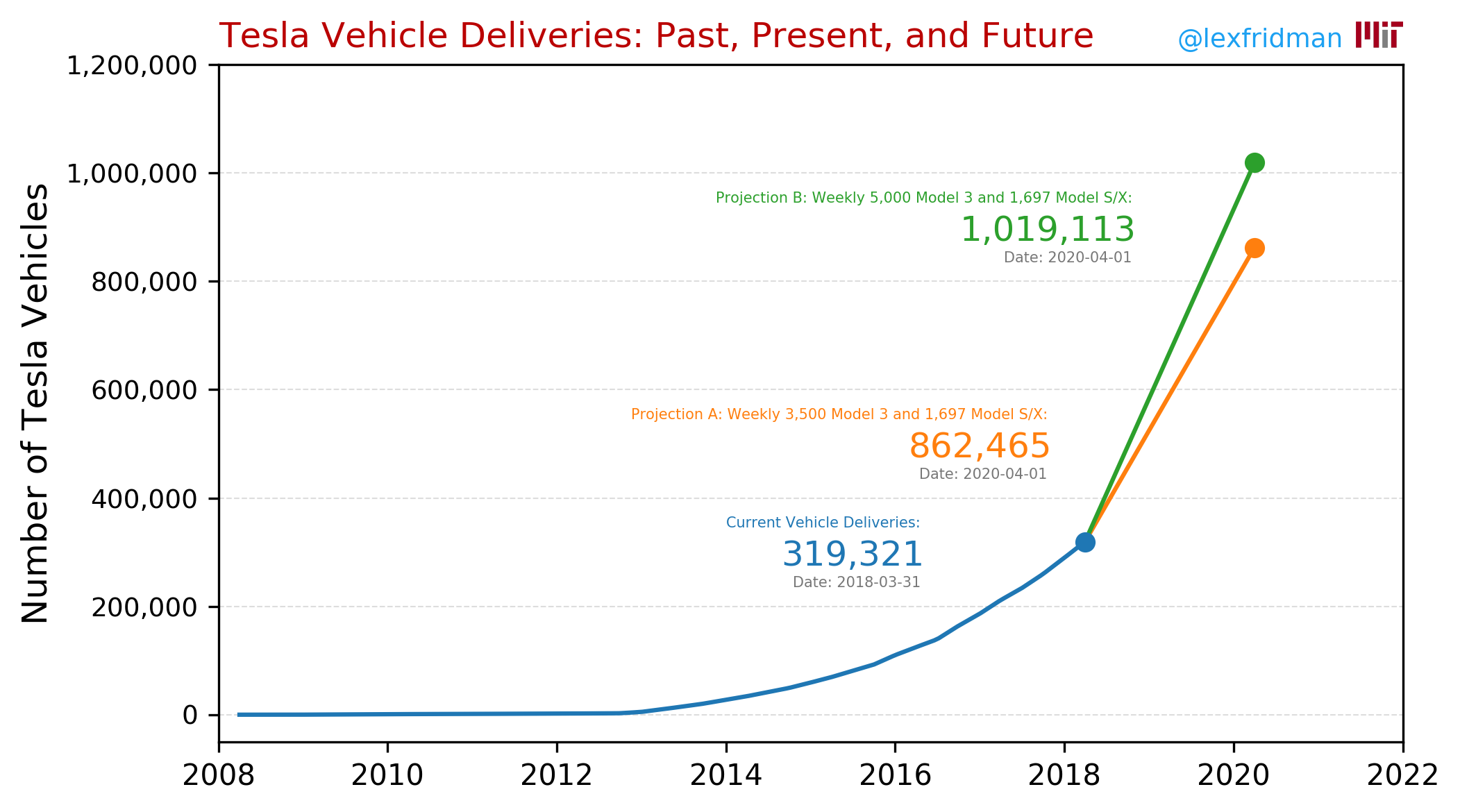

From issue #93

Tesla vehicle deliveries and projections

In analyzing the Tesla subset of the MIT-AVT dataset, Lex Fridman said: “I came across the need to estimate how many Tesla vehicles have been delivered, and how many of them have Autopilot and which version of Autopilot hardware.”

The post was last updated on June 17th, 2018 and includes an FAQ section with questions received.

From issue #13

Tesla Vision Update: Replacing Ultrasonic Sensors with Tesla Vision

First, it was the radar, and now Tesla is replacing the ultrasonic sensors, the round ones that you see on your bumpers, with pure Vision. Tesla wants to use their cameras, plus some type of memory of what they saw, to understand objects even when they are really close to the car. While the software transitions into that, new cars without the sensors will have limited or inactive Park Assist, Autopark, Summon, and Smart Summon. I personally don’t know if I like the change. It is hard to detect objects that are inches away from the car with the new camera setup, and I haven’t yet seen Tesla use the concept of what was there to predict what is there, but I guess it should be doable.

From issue #236

Teslas will soon talk to people if you want

Yeah, I don’t see why not 😂

Teslas will soon talk to people if you want. This is real. pic.twitter.com/8AJdERX5qa

— Elon Musk (@elonmusk) January 12, 2020

Tesla’s Dojo Supercomputer begins operations

After much anticipation, Tesla has officially launched its powerful Dojo supercomputer, representing a major milestone for the company’s technological capabilities. Tesla Dojo is a custom-built supercomputer optimized for neural network training and designed to process vast amounts of visual data for autonomous driving. The Dojo supercomputer will allow Tesla to accelerate the development of Autopilot and Full Self-Driving (FSD) technologies. It could also allow the development and deployment of more sophisticated AI algorithms, increasing the safety and reliability of Tesla’s autonomous driving technology.

From issue #275

Testing stop sign and traffic detection and reaction

Greentheonly tested what happens when his Tesla encounters a stop sign that wasn’t in the maps that the car has. While the stop sign renders correctly on the screen, the car doesn’t react to it. If the sign is in the maps, the car beeps. My assumption is that this will change since detection seems to be working pretty well in the FSD preview. Greentheonly also discovered that if there’s a stop sign in sight, Autopilot refuses to engage if it was not already active.

Today they show the stop signs and traffic lights but they only act on these if the maps agree there's something. It's trivial to demonstrate: pic.twitter.com/mEZIjHImTh

— green (@greentheonly) January 13, 2020
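The behavior Greentheonly observed can be summarized as a small decision table. The sketch below is only an illustration of that description: the function and field names are invented here, and this is not Tesla's actual logic.

```python
def react_to_stop_sign(seen_by_vision: bool, in_map: bool) -> dict:
    """Model the observed behavior for a single stop sign.

    The sign is drawn whenever the cameras detect it, but the car only
    alerts the driver (beeps) when the map data also contains the sign.
    """
    return {
        "render_on_screen": seen_by_vision,
        "alert_driver": seen_by_vision and in_map,
    }


def can_engage_autopilot(already_active: bool, stop_sign_in_sight: bool) -> bool:
    """Autopilot stays on if already active, but will not newly engage
    while a stop sign is in sight."""
    return already_active or not stop_sign_in_sight
```

So a sign that is missing from the maps gives `react_to_stop_sign(True, False)`, which renders the sign but produces no alert, matching the test described above.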

The new neural networks (really well) explained

Jimmy_d did an awesome job at explaining to us mortals how the new Neural Networks pushed on 2018.10.04 work. The three main types he observed are called main, fisheye and repeater. “I believe main is used for both the main and narrow forward facing cameras, that fisheye is used for the wide angle forward facing camera, and that repeater is used for both of the repeater cameras,” he says.

Read more: Teslamotorsclub.com

From issue #1

The next big FSD release may arrive in 6 to 10 weeks for Early Access

Elon Musk said he’s already driving the bleeding-edge Alpha version of FSD in his car and, in his own words, it’s almost at zero interventions between home and work. Elon mentioned this will be available to folks in Early Access in just a few weeks… in Elon’s Time™.

From issue #125

There is no “unintended acceleration” in Tesla vehicles

Back in March 2019, we covered the tweets from Bonnie and Jason Hughes that explained how unintended acceleration events were just not possible. Now, after some allegations were made to the NHTSA, Tesla has given their official explanation, much in line with what Jason explained back then. Worth reading.

Read more: Tesla

From issue #95

This is what Tesla collects in an accident on AP2+

greentheonly (aka verygreen on TMC) came into possession of two Autopilot units from crashed cars and was able to extract the snapshots that the cars stored during the crash. One of the crashes is an AP1 that crashed in July 2017; its crash snapshots only included 5 cameras (main, narrow, fisheye, and 2 pillars) at 1 fps. The other crash is from October 2017, and its snapshot data includes 30 fps footage for the narrow and main cams in addition to the data mentioned earlier.

Read more: Reddit

From issue #35

TOSV founder talks about the latest FSD update

Our good friend John from Tesla Owners Silicon Valley was featured in an article talking about the club and the latest FSD Beta update. John has been one of the few people who have publicly been testing the FSD Beta and has put a bunch of videos out there to show the rest of us what the beta is capable of.

From issue #151

Traffic light and stop sign control coming to EU

Good news for Tesla owners in Europe. Tesla has contacted folks who are part of the Early Access Program announcing they will soon push the 2020.28.10 update which contains the much-awaited Traffic and Stop Sign Detection new feature. This feature will only be available in cars with FSD (Hardware 3 retrofits have already started in Europe as well).

From issue #121

TRAIN AI 2018 - Building the Software 2.0 Stack, by Andrej Karpathy

Tesla related stuff in this talk starts at minute 15:30. Some interesting bits:

- Since he joined - 11 months ago - the neural network is taking over the AP code base

- Tesla has created a ton of tooling for the people who tag images so they can be more efficient

- Around minute 20 he shows why labeling something that seemed easy like lane lines isn’t as easy as it seems

- Min. 22:30 - He shows traffic lights, some are really crazy!

- He talks about how random data collection doesn’t work for Tesla. For instance, if you want to identify when a car changes lanes and you collect images at random, more often than not the blinkers are going to be off

- Min. 25:40 - He mentions the auto wipers, how the dataset is crazy, and how it ‘mostly works’

Video of AP avoiding a tire on the road by itself

Check out Autopilot avoiding a tire in the road all by itself! Thank you @TeslaTested for sharing.

Was on Autopilot and a tire was in the road, the car steered around it without me touching the wheel. Truly incredible @elonmusk (don’t know why it said the video was deleted, please RT again) pic.twitter.com/6Phwwd2ym3

— Tesla Tested (@TeslaTested) March 21, 2020

Video of Autopilot avoiding a semi tire on the road

Check out this video of Autopilot taking over to avoid a semi tire on the road. The owner says AP took over ‘a split second’ before he reacted. In case you’re wondering, this is a Model 3 without FSD and Autopilot was engaged.

From issue #122

What does the Autopilot rewrite really mean?

Interesting thread about the FSD rewrite. The main thing is without the rewrite, FSD cannot take unprotected turns in an intersection because it cannot build an accurate representation of the layout. Karpathy shared all about it earlier this year, I’m re-sharing the video here in case you missed it.

From issue #134

What is factored into calculating your Tesla Insurance premium?

I think it’s pretty cool that you can save some $$ on the insurance premium based on driving behavior. Tesla Insurance is taking into account things like ABS activation, hours driven, forced Autopilot disengagements, and more, and in any case, the monthly premium will not change by more than 50% based on these factors. Curious to see how other insurance companies are going to try and compete with this.

From issue #167

What Tesla Autopilot sees at night

verygreen is back, this time he is showing us what the AP2 running v9 sees at night. It is weird the glare the blinkers put on the repeater cameras. You can see it at 00:32 on the left repeater camera.

Read more: TMC Forum

From issue #34

What's new in beta 2018.21

Tesla release versions with odd numbers have historically been betas pushed only to a handful of users (3 - 5 according to Teslafi) under an NDA. The last time a beta was rolled out was a few weeks before the NN rewrite, so we have high hopes that this one includes something big as well. The lucky ones who have rooted their Tesla are dropping some bits of info on TMC, see below (thanks BigD0g and dennis_d for all the info!):

- Screen showing blind spot messages and detection (read more)

- Improvement on how AP handles road dividers (read more)

- AP2 running 2018.21 showing cars in adjacent lanes (ala AP1) (video)

Widening FSD Beta program (probably) next month

FSD Beta keeps improving, which is why Elon believes Tesla will widen FSD Beta participation next month. He also admitted that it needs more work.

From issue #216

Workshop on Scalability in Autonomous Driving by Andrej Karpathy 📹

Mostly a rehash of stuff from the last talk he gave in February, but there are some new details about speed limit signs starting at 11:00 and also some impressive demos of the birds-eye view predictor handling complex intersections and lane lines at 19:45.

See more: YouTube

From issue #117